AI Literacy 101

Congressional Briefing

Ariel Fogel

November 5, 2025

About Me (Ariel Fogel)

- Previously: Health policy in DC, EdTech in Bay Area, MS Learning Sciences

About Me (Ariel Fogel)

- Previously: Health policy in DC, EdTech in Bay Area, MS Learning Sciences

- Supply Chain Attack at OWASP Global AppSec

Pillar Security

- Develop runtime guardrails

- Discover AI assets

- Evaluate posture risk

- Conduct AI red-teaming against live systems

We need system-level governance, not just model regulation

AI risk has two distinct layers

Two Layers of AI Risk

- Model-Level (Provider-Level) Risk

Two Layers of AI Risk

- Model-Level (Provider-Level) Risk

- System-Level (Adoption-Level) Risk

Current debates focus on model-level risk

- Fairness and bias in outputs

Current debates focus on model-level risk

- Fairness and bias in outputs

- Regulatory sandboxes and experimentation frameworks

What's missing: system-level governance

- Integration safety isn't tested in sandboxes

What's missing: system-level governance

- Integration safety isn't tested in sandboxes

- AI systems are dynamic—risk evolves with connectivity

We need to expand the conversation

- From model regulation to system-level governance

What we'll cover in this briefing

- How LLMs actually work (and why they hallucinate)

What we'll cover in this briefing

- How LLMs actually work (and why they hallucinate)

- The three system types and their risk profiles

What we'll cover in this briefing

- How LLMs actually work (and why they hallucinate)

- The three system types and their risk profiles

- Real-world attack patterns and failures

What we'll cover in this briefing

- How LLMs actually work (and why they hallucinate)

- The three system types and their risk profiles

- Real-world attack patterns and failures

- Practical controls and governance frameworks

How LLMs Actually Work

The training process: learning from scale

- Models learn statistical patterns from massive text corpora

The training process: learning from scale

- Models learn statistical patterns from massive text corpora

- Training transforms billions of documents into distributed weights

The training process: learning from scale

- Models learn statistical patterns from massive text corpora

- Training transforms billions of documents into distributed weights

- Scale matters: more data + bigger models = better performance

Scaling from research to practice

GPT-1 (2018)

Scaling from research to practice

GPT-1 (2018)

GPT-2 (2019)

Scaling from research to practice

GPT-1 (2018)

GPT-2 (2019)

GPT-3 (May 2020)

Scaling from research to practice

GPT-1 (2018)

GPT-2 (2019)

GPT-3 (May 2020)

GPT-3.5 (Late 2022)

ChatGPT launch • Mass consumer adoption

Why we can't just audit the training data

- Training data: hundreds of billions of tokens from web, books, code, forums

Why we can't just audit the training data

- Training data: hundreds of billions of tokens from web, books, code, forums

- Models learn distributed patterns, not indexed facts tied to documents

Why we can't just audit the training data

- Training data: hundreds of billions of tokens from web, books, code, forums

- Models learn distributed patterns, not indexed facts tied to documents

- Tracing influence of individual documents is infeasible

Why we can't just "delete" problematic knowledge

- Knowledge is statistically embedded across billions of weights

Why we can't just "delete" problematic knowledge

- Knowledge is statistically embedded across billions of weights

- Can't surgically remove specific facts without retraining

Why we can't just "delete" problematic knowledge

- Knowledge is statistically embedded across billions of weights

- Can't surgically remove specific facts without retraining

- Dataset includes full spectrum of human expression—good and harmful

LLMs calculate probabilities, not facts

- Trained on billions of text examples

LLMs calculate probabilities, not facts

- Trained on billions of text examples

- For each word: generates probability scores

LLMs calculate probabilities, not facts

- Trained on billions of text examples

- For each word: generates probability scores

- Selects based on likelihood, not truth

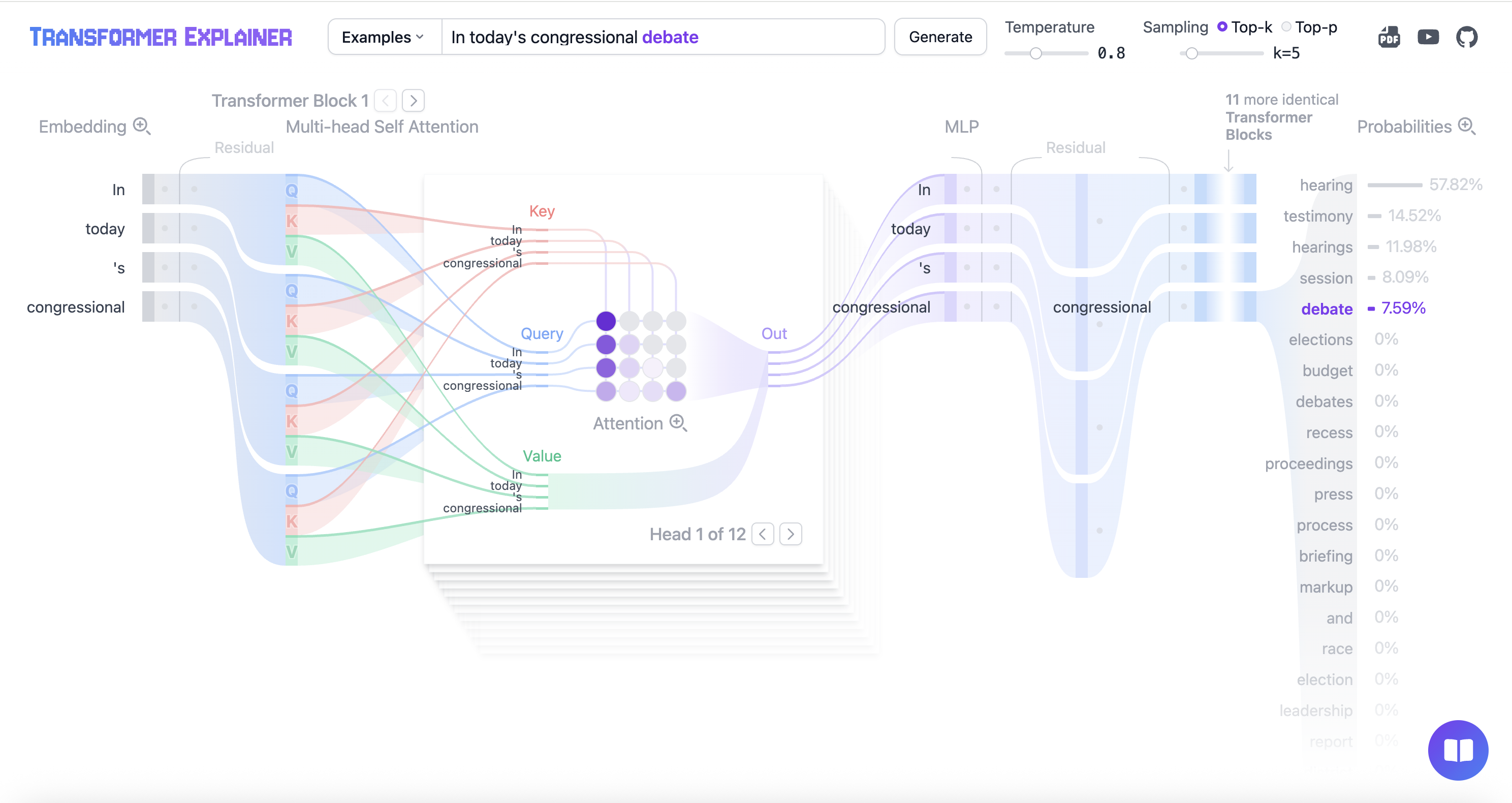

Let's watch an LLM in action

Prompt: "In today's congressional…"

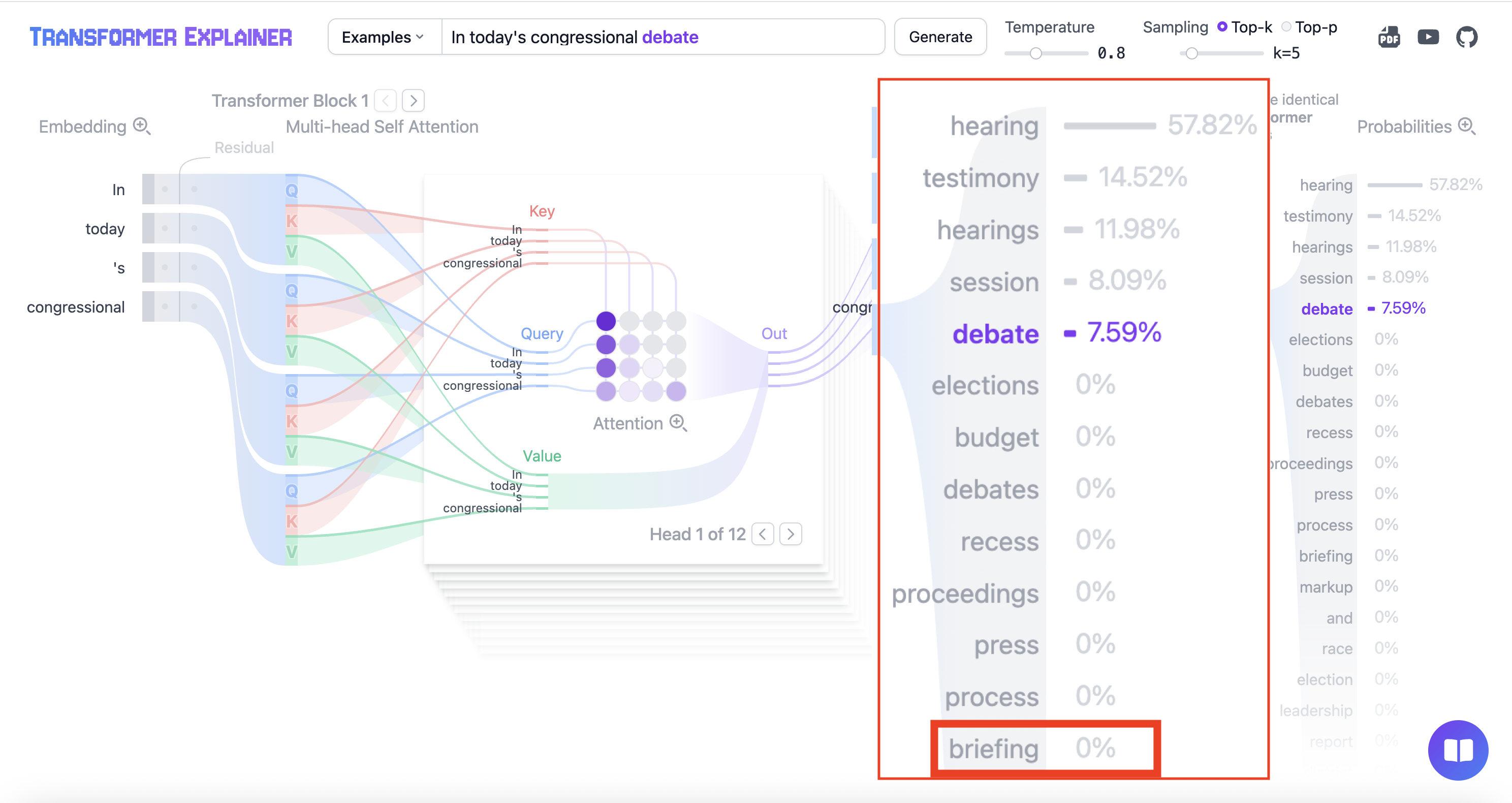

The model picked "debate"

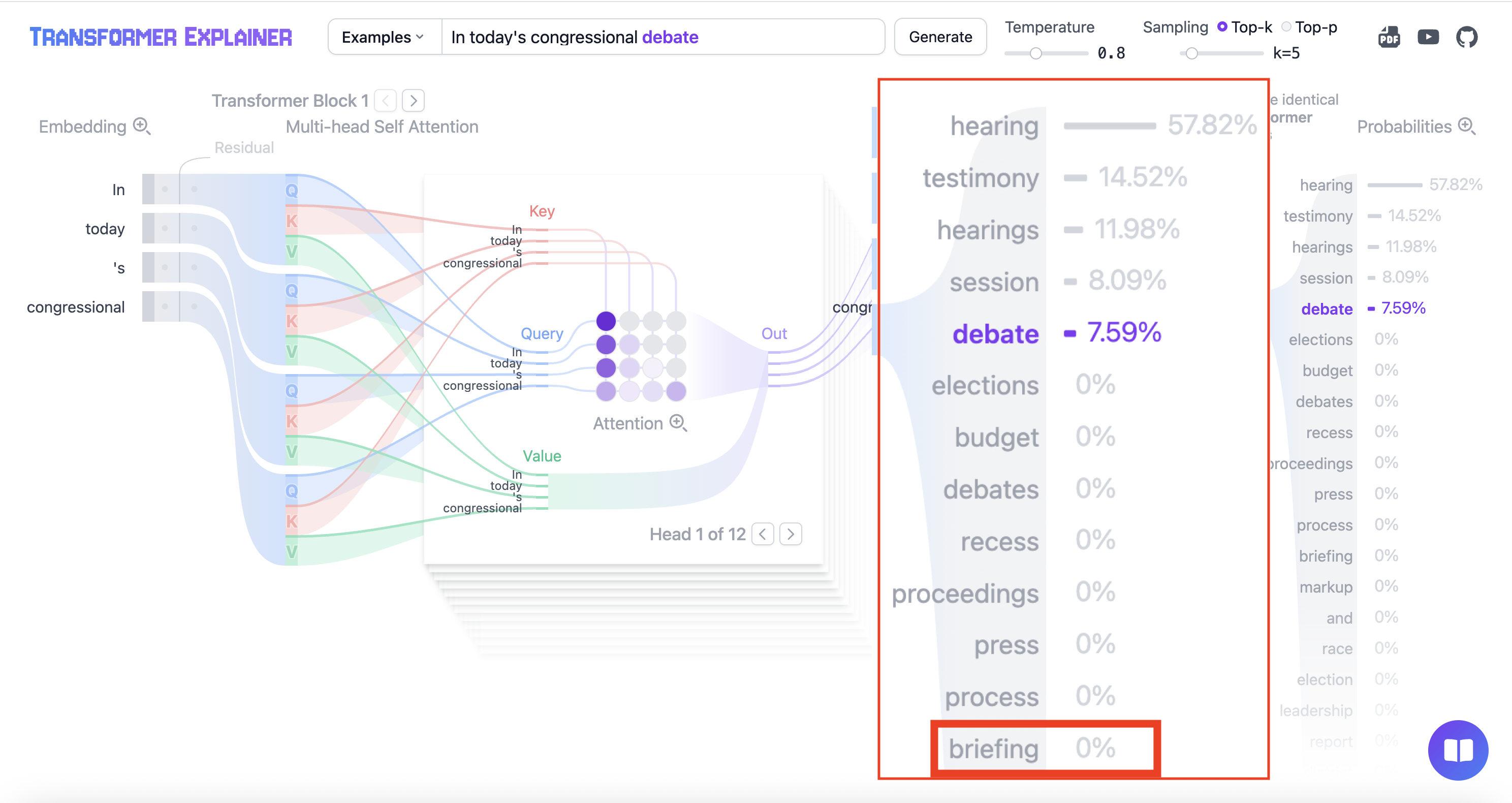

But "briefing" got 0% probability

But "briefing" got 0% probability

Every word follows this same process

- Calculate probabilities

Every word follows this same process

- Calculate probabilities

- Select from the distribution

Every word follows this same process

- Calculate probabilities

- Select from the distribution

- Move to next word

Every word follows this same process

- Calculate probabilities

- Select from the distribution

- Move to next word

- Common patterns ≠ true facts

"Hallucination" is a feature, not a bug

- Models always produce an answer

"Hallucination" is a feature, not a bug

- Models always produce an answer

- Low confidence → picks from worse options

"Hallucination" is a feature, not a bug

- Models always produce an answer

- Low confidence → picks from worse options

- Can't be "fixed" without changing what LLMs are

Without external connections, LLMs are just text generators

Safety vs. Security

Understanding risk through system architecture

Safety Breaks Guardrails—Security Hijacks Control

Safety

Breaking guardrails

Getting models to do what they're trained not to do

Safety Breaks Guardrails—Security Hijacks Control

Safety

Breaking guardrails

Getting models to do what they're trained not to do

Security

Hijacking control flow

Extracting privileged data or taking unauthorized actions

Security Violations Cause Material Financial Harm

- Safety: Consumer harm, compliance issues

Security Violations Cause Material Financial Harm

- Safety: Consumer harm, compliance issues

- Security: Data breaches, unauthorized transactions, system compromise

Security Violations Cause Material Financial Harm

- Safety: Consumer harm, compliance issues

- Security: Data breaches, unauthorized transactions, system compromise

- Regulation focuses on system architecture, not content moderation

Architecture 1: Standalone LLM

Not connected to external data or tools

Standalone LLM: Primary Risks

- Bypassing safety guardrails

Standalone LLM: Primary Risks

- Bypassing safety guardrails

- Misinformation

Standalone LLM: Primary Risks

- Bypassing safety guardrails

- Misinformation

- Over-reliance—users trusting incorrect outputs

Architecture 2: RAG

Retrieval-Augmented Generation: Connected to knowledge bases

Architecture 2: RAG

Architecture 2: RAG

RAG Systems Create New Attack Vectors

- Still: Overreliance and jailbreaking

RAG Systems Create New Attack Vectors

- Still: Overreliance and jailbreaking

- Context poisoning—malicious documents in the LLM context

RAG Systems Create New Attack Vectors

- Still: Overreliance and jailbreaking

- Context poisoning—malicious documents in the LLM context

- Prompt injection

Lethal Trifecta

When is a RAG System Susceptible?

- It contains private data

- It processes untrusted content

- It can communicate externally

Architecture 3: Agents

- LLMs connected to external "tools"

- Invoking tools has real-world actions

Architecture 3: Agents

- 1. User Request

Architecture 3: Agents

- 1. User Request

- 2. Invoke tools if applicable

Architecture 3: Agents

- 1. User Request

- 2. Invoke tools if applicable

- 3. Evaluate tool response

Architecture 3: Agents

- 1. User Request

- 2. Invoke tools if applicable

- 3. Evaluate tool response

- 4. Loop

Architecture 3: Agents

Architecture 3: Agents

Architecture 3: Agents

Meta's "Agents Rule of Two"

Meta AI publicly endorsed this framework (October 31, 2025)

- Based on Simon Willison's "lethal trifecta" and Chromium's security policy

- Core principle: Until prompt injection is solved, agents must have no more than 2 of 3 properties

- Major AI labs are converging on system-level containment

Can We Mitigate These Risks?

Yes — through architectural design patterns

Secure By Design

Prompt Injection Is An Enduring Risk

- LLMs are designed to follow instructions—that's their core capability

Prompt Injection Is An Enduring Risk

- LLMs are designed to follow instructions—that's their core capability

- No reliable way to distinguish "trusted" vs. "untrusted" instructions at the token level

Contain the Damage Through Architecture

- Assume injection will occur

Contain the Damage Through Architecture

- Assume injection will occur

- Contain damage: isolation, least privilege, mandatory adjudication

Contain the Damage Through Architecture

- Assume injection will occur

- Contain damage: isolation, least privilege, mandatory adjudication

- Prevent external actions before validation

Key Takeaways

Models Predict. Systems Decide.

- LLMs are probabilistic—they predict patterns, not facts

Hallucination is inherent; reduce it with RAG/verification, not model size

Models Predict. Systems Decide.

- LLMs are probabilistic—they predict patterns, not facts

Hallucination is inherent; reduce it with RAG/verification, not model size - Reliability flows from system design

Boundaries, logs, approvals, evaluations—not the model alone

Security vs. Safety

- Safety issues matter for trust

But difficult to regulate how the model is constructed - Security vulnerabilities cause operational damage

More urgent and more amenable to operational controls and auditing

Security vs. Safety

- Safety issues matter for trust

But difficult to regulate how the model is constructed - Security vulnerabilities cause operational damage

More urgent and more amenable to operational controls and auditing - Regulatory attention should prioritize system-level security

Not because safety doesn't matter—because security is testable and enforceable

Prompt Injection

- Fundamental vulnerability in all LLMs

Can be contained architecturally—like SQL injection and other persistent threats

Prompt Injection

- Fundamental vulnerability in all LLMs

Can be contained architecturally—like SQL injection and other persistent threats - Enables data theft and unauthorized actions

Malicious instructions embedded in emails, web pages, uploads hijack behavior - Causes material harm in financial services

Unauthorized transfers, GLBA breaches, and compliance failures

The Lethal Trifecta: 15-Second Risk Diagnostic

Private Data

Customer PII, transactions, credentials

The Lethal Trifecta: 15-Second Risk Diagnostic

Private Data

Customer PII, transactions, credentials

Untrusted Content

Web, emails, user uploads, third-party feeds

The Lethal Trifecta: 15-Second Risk Diagnostic

Private Data

Customer PII, transactions, credentials

Untrusted Content

Web, emails, user uploads, third-party feeds

Exfiltration/Action

External APIs, email, payments, case writes

When the Trifecta Is Complete: Contain or Kill

If all three conditions are present:

- Containment is mandatory

Data/Command/Approval boundaries, pre-action adjudication,

immutable logs, red-team testing

When the Trifecta Is Complete: Contain or Kill

If all three conditions are present:

- Containment is mandatory

Data/Command/Approval boundaries, pre-action adjudication,

immutable logs, red-team testing - Or kill one leg of the trifecta

Remove private data access, isolate from untrusted content,

or block external actions

Three System Types Need Different Oversight

- Standalonelower risk

Three System Types Need Different Oversight

- Standalonelower risk

- RAGmedium risk

Three System Types Need Different Oversight

- Standalonelower risk

- RAGmedium risk

- Agentichigher risk

Safe Design Enables Safe Adoption

- Architectural controls make AI deployable

Breaking the lethal trifecta reduces risk from "system-compromising" to "manageable"

Questions?

Appendix: Reference Frameworks

OWASP LLM Top-10 (2025)

Common vocabulary for vendor diligence and exam readiness

- LLM01: Prompt Injection → Lethal Trifecta, Data Boundary, Security vs. Safety (Sections 3-4, 8)

- LLM06: Excessive Agency → Command Boundary, Tool Scopes, Circuit Breakers (Section 6)

- LLM08: Vector & Embedding Weaknesses → RAG Systems, Tenant Isolation (Section 4, 6)

- Resource: owasp.org/llm-top-10 (2025 PDF)

- How to use: Map vendor controls to LLM01/06/08; demand test results; request red-team reports

NIST AI Governance Stack

U.S. federal anchor for control mapping and measurement

- NIST 2025 Cyber AI Initiative → System-level lifecycle governance (CSF, SP 800-53 cross-walk)

- AI Control Overlays Concept → Map controls to generative/predictive, single/multi-agent systems

- 2025 GenAI Text Challenge → Evaluation structure (Generator/Prompter/Discriminator roles)

- How to use: Request control overlay mappings; ask for AI-BOM and system cards; cite NIST GenAI structure for eval protocols

Financial Services Supervisory Signals (2025)

Bipartisan cover for current-year oversight asks

- OCC Spring 2025 Semiannual Risk Perspective → Operational & third-party risk as GenAI scales

- Federal Reserve Governor Barr (April 4, 2025) → Getting bank risk management ready for GenAI

- CFPB Reg B §1002.9 → Adverse-action reason specificity and traceability (credit use cases)

- SEC (June 12, 2025) → Predictive Data Analytics conflicts proposal withdrawal (investor use cases)

- How to use: Cite in Congressional letters, oversight memos, vendor RFPs to ground asks in established authority

Adversarial & Incident Response Frameworks

Red-team planning and incident coordination expectations

- MITRE ATLAS → Threat-informed AI tactics lexicon (injection, poisoning, evasion, exfiltration)

- SAFE-AI 2025 Report → Control selection approach tailored to AI system types

- JCDC AI Playbook (January 2025) → Federal incident coordination baseline for AI incidents

- CISA AI Data Security Guidance → Data boundary best practices (allow-lists, signing, sanitization)

- How to use: Structure red-team exercises using ATLAS tactics; align incident response plans with JCDC Playbook

Global Alignment & Transparency Frameworks

International standards for capability claims and risk reporting

- OECD AI Capability Indicators (June 2025) → Objective measures for AI system capabilities (task performance, robustness, fairness, interpretability)

- G7 Hiroshima AI Process / OECD Reporting Framework (Feb 2025) → Transparency for advanced AI developers (governance, testing, incident response, public disclosure)

- How to use: Request OECD benchmark results from vendors; ask "Can you fill out the G7 framework?"

- Goal: Avoid conflating marketing hype with measured capability; demand evidence-based assessment

The Lethal Trifecta: 15-Second Risk Diagnostic

Private Data

- Customer PII

- Transaction records

- Account credentials

Untrusted Content

- Open web pages

- Inbound emails/PDFs

- User uploads

Exfiltration/Action

- External API calls

- Outbound email

- Payment initiation

- All three present? → Mandatory containment.

- Kill any one leg → Risk collapses.

Academic References: Scaling Laws and Model Performance

- Kaplan, J., McCandlish, S., Henighan, T., et al. (2020). Scaling Laws for Neural Language Models. OpenAI.

- Hoffmann, J., Borgeaud, S., Mensch, A., et al. (2022). Training Compute-Optimal Large Language Models. DeepMind.

- Brown, T.B., Mann, B., Ryder, N., et al. (2020). Language Models are Few-Shot Learners. OpenAI.

- Bubeck, S., Chandrasekaran, V., Eldan, R., et al. (2023). Sparks of Artificial General Intelligence: Early Experiments with GPT-4. Microsoft Research.

Academic References: Dataset Scale and Diversity

- Bender, E.M., Gebru, T., McMillan-Major, A., & Shmitchell, S. (2021). On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? FAccT Conference.

- Wei, J., Tay, Y., Bommasani, R., et al. (2022). Emergent Abilities of Large Language Models. arXiv preprint arXiv:2206.07682.

- Bommasani, R., Hudson, D.A., Adeli, E., et al. (2021). On the Opportunities and Risks of Foundation Models. Stanford Center for Research on Foundation Models (CRFM).

Academic References: Embedded Knowledge and Unlearning

- Eldan, R., & Li, Y. (2023). Memorization in Transformers: Mechanisms and Data Attribution. OpenAI.

- Yao, Y., Sun, H., Cao, S., et al. (2023). Editing Large Language Models: Review, Challenges, and Future Directions.

- Ilharco, G., Wortsman, M., Hajishirzi, H., et al. (2023). Editing Models with Task Arithmetic.

- Carlini, N., Tramer, F., Wallace, E., et al. (2022–2024). Extracting Training Data from Large Language Models. Google Research.

Academic References: Auditing Training Data and Privacy

- Privacy Auditing for Large Language Models with Natural Identifiers. (2023). OpenReview.

https://openreview.net/pdf?id=jp4XlcpRIW - Perspective: Why Data Subjects' Rights to LLM Training Data Are Not Relevant. (2023). International

Association of Privacy Professionals (IAPP).

https://iapp.org/news/a/perspective-why-data-subjects-rights-to-llm-training-data-are-not-relevant

Academic References: Benchmarking and Scale Effects

-

Wikipedia contributors. (2025). GPT-3. In Wikipedia, The Free Encyclopedia.

https://en.wikipedia.org/wiki/GPT-3 -

Wikipedia contributors. (2025). MMLU (Massive Multitask Language Understanding benchmark). In Wikipedia, The Free Encyclopedia.

https://en.wikipedia.org/wiki/MMLU

Using These Frameworks in Oversight

- Vendor Diligence: Request mappings to OWASP Top-10, NIST overlays, OECD benchmarks, G7 reporting

- Exam Planning: Structure artifact requests around NIST/CISA/JCDC/OCC frameworks

- Red-Team Exercises: Use MITRE ATLAS tactics and SAFE-AI control selection

- Congressional Letters: Cite OCC, Fed, CFPB, SEC supervisory signals for bipartisan authority

- Incident Response: Align plans with JCDC AI Playbook; reference CISA guidance